FitBlendRig


Only in Wrap4D

Computes control values that allow the Blendshape Rig to best match the target facial expression. In addition to per-vertex matching, the node matches the shape of the target facial expression and copies the degree of closure of the eyes and mouth.

Inputs

- Blend Rig

Type: BlendRig. Blendshape Rig whose control values are optimized.

- Target Geometry

Type: Geometry. Target geometry that the Blendshape Rig should match. The inverse of the Blendshape Rig neutral geometry transform is applied to the target geometry.

- Fitting Vertex Mask

Type: VertexMask (optional). The vertex mask that determines which vertices are taken into account when the control values are optimized. If it is not specified, a mask consisting of ones (all vertices) is used.

Tip

To improve the fit to the target facial expression, exclude the corners of the eyes and lips, as well as the eyelid folds, from the fitting mask.

- Distance Constrained Points

Type: PointCorrespondences (multiple, optional). Point pairs on the geometry that define the closure lines of the lips and eyes.

- Target Left Eye Transform

Type: Geometry (optional). The left eye geometry, located at the origin and directed along the Z axis, with its rotation transform applied (the rotation is also applied at the origin).

- Target Right Eye Transform

Type: Geometry (optional). The right eye geometry, located at the origin and directed along the Z axis, with its rotation transform applied (the rotation is also applied at the origin).

Outputs

- Blendshape Rig

Type: BlendRig. Blendshape Rig with optimized control values.

Parameters

- Compute:

starts the computation.

- Auto-Compute:

if set, the computation starts whenever any parameter of the node is changed.

- Make Prefit:

if set, a simplified problem is solved before the main problem to find good initial control values. If Use Current Controls As Init is set, the prefit starts from the input Blendshape Rig control values. To use the input Blendshape Rig control values as the initial control values for the main problem, disable Make Prefit.

- Use Current Controls As Init:

if set, the input rig control values are used as the initial control values for the main problem. If disabled, zero control values are used instead.

- Vertex Weight:

the weight of the per-vertex matching error.
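To make the per-vertex term and the Fitting Vertex Mask concrete, here is a minimal sketch assuming a plain linear blendshape model (neutral geometry plus control-weighted deltas) and a squared-distance error multiplied by each vertex's mask value. The function names and data layout are hypothetical, not Wrap4D's API; the actual rig evaluation (for example, with corrective shapes or relaxation masks) may differ.

```python
from typing import Dict, List, Optional, Sequence

Vec3 = Sequence[float]


def blendshape_vertices(neutral: List[Vec3], deltas: Dict[str, List[Vec3]],
                        controls: Dict[str, float]) -> List[List[float]]:
    """Assumed linear rig: neutral + sum(control * delta) for every vertex."""
    verts = [list(v) for v in neutral]
    for name, value in controls.items():
        for i, d in enumerate(deltas[name]):
            for k in range(3):
                verts[i][k] += value * d[k]
    return verts


def vertex_error(verts, target, weight: float,
                 mask: Optional[Sequence[float]] = None) -> float:
    """Vertex Weight times the masked sum of squared distances to the target."""
    if mask is None:
        mask = [1.0] * len(verts)  # no mask given: a mask of ones
    total = 0.0
    for v, t, m in zip(verts, target, mask):
        total += m * sum((a - b) ** 2 for a, b in zip(v, t))
    return weight * total
```

A mask value of 0 removes a vertex from the error entirely, which is why excluding eye corners and eyelid folds from the mask stops them from pulling on the controls.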

- Shape Weight:

the weight of the shape error, which fits the Blendshape Rig to the shape of the target expression. It improves the result when the Blendshape Rig cannot reproduce the required facial deformation per vertex, which is almost always the case. When the Shape Weight is zero, the optimization uses all available controls to match the target facial expression per vertex.

- Shape Angle Threshold (Degrees):

the minimum angle a triangle must have to be used in shape matching. This threshold is needed because the shape error produces incorrect results on triangles with a very small angle, which often occur at the eyelid folds and at the corners of the eyes and lips.
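The threshold can be illustrated with a small filter that keeps only triangles whose smallest interior angle reaches the threshold. This is a sketch under the assumption that the check is applied to the neutral geometry of the rig (as the Troubleshooting section suggests); the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Sequence[float]


def _angle_at(p: Vec3, q: Vec3, r: Vec3) -> float:
    """Interior angle at vertex p of triangle (p, q, r), in degrees."""
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    if nu == 0.0 or nv == 0.0:
        return 0.0  # degenerate edge: treat it as a zero angle
    cos_a = sum(a * b for a, b in zip(u, v)) / (nu * nv)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))


def min_angle(a: Vec3, b: Vec3, c: Vec3) -> float:
    """Smallest interior angle of a triangle, in degrees."""
    return min(_angle_at(a, b, c), _angle_at(b, c, a), _angle_at(c, a, b))


def shape_triangles(vertices: List[Vec3], triangles: List[Tuple[int, int, int]],
                    threshold_deg: float) -> List[int]:
    """Indices of triangles whose smallest angle reaches the threshold."""
    return [idx for idx, (i, j, k) in enumerate(triangles)
            if min_angle(vertices[i], vertices[j], vertices[k]) >= threshold_deg]
```

A sliver triangle such as (0, 0, 0), (1, 0, 0), (0.5, 0.01, 0) has a smallest angle of about 1.1 degrees, so a threshold of a few degrees already drops it from shape matching.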

- Initial Control Weight:

the weight of the term that keeps the result control values close to the initial control values. If Use Current Controls As Init is set, the result control values are pulled toward the input control values. If Make Prefit is set, they are pulled toward the prefit result. Otherwise, they are pulled toward zero.
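The rules above for choosing the reference values can be sketched as follows. The squared-difference penalty and the precedence of the prefit result when both Make Prefit and Use Current Controls As Init are set are assumptions (the prefit starts from the input values, and its result then replaces them as the starting point); the function names are hypothetical.

```python
from typing import Dict, Optional

Controls = Dict[str, float]


def init_reference(use_current: bool, make_prefit: bool, input_controls: Controls,
                   prefit_controls: Optional[Controls] = None) -> Controls:
    """Values the Initial Control Weight term pulls the result toward."""
    if make_prefit and prefit_controls is not None:
        return prefit_controls  # assumption: the prefit result takes precedence
    if use_current:
        return dict(input_controls)
    return {name: 0.0 for name in input_controls}


def initial_control_term(controls: Controls, reference: Controls, weight: float) -> float:
    """Assumed penalty: weight * sum of squared differences from the reference."""
    return weight * sum((value - reference[name]) ** 2 for name, value in controls.items())
```

With an Initial Control Weight of 0 the term vanishes, so the result is free to move away from the reference.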

- Point Weight:

the weight for copying the distance between the point pairs that define the closure lines of the lips and eyes. For each pair, the weight decreases linearly with the distance between the points.

- Point Activation Max Distance:

the maximum distance between the points of a pair at which the distance is still copied.
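Point Weight and Point Activation Max Distance can be read together as a linear falloff. The sketch below assumes that the falloff is measured on the target pair distance, that it reaches zero exactly at the maximum distance, and that the rig and target distances are compared with a squared difference. None of this is confirmed by the node documentation, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Sequence[float]
Pair = Tuple[Vec3, Vec3]


def pair_weight(distance: float, point_weight: float, max_distance: float) -> float:
    """Assumed linear falloff: full Point Weight at 0, zero at max_distance."""
    if max_distance <= 0.0 or distance >= max_distance:
        return 0.0
    return point_weight * (1.0 - distance / max_distance)


def closure_term(rig_pairs: List[Pair], target_pairs: List[Pair],
                 point_weight: float, max_distance: float) -> float:
    """Penalize differences between rig and target distances of each pair."""
    total = 0.0
    for (ra, rb), (ta, tb) in zip(rig_pairs, target_pairs):
        d_target = math.dist(ta, tb)
        w = pair_weight(d_target, point_weight, max_distance)
        total += w * (math.dist(ra, rb) - d_target) ** 2
    return total
```

Under this reading, a nearly closed mouth or eye (small target distance) gets almost the full weight, while a wide-open one is ignored.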

- Iterations:

the maximum number of optimization iterations. The optimization often converges and stops before reaching this number.

- Include Controls:

whitelist of control names to fit, separated by commas. Example:

JawOpen, Funnel, Blink

- Exclude Controls:

blacklist of control names, separated by commas. Controls that are not fitted (blacklisted or not whitelisted) keep the values determined by the Use Current Controls As Init parameter.

Note

The blacklist overrides the whitelist: if a control name appears in both lists, that control is not fitted. A control whose name appears in neither list is also excluded.

Note

In the whitelist and blacklist, control names are matched as written, so the order of suffixes matters. For example, Jaw_L_Left does not select the Jaw_Left_L control.

Tip

You can use wildcard characters to simplify the whitelist and blacklist. ? matches exactly one arbitrary character; for example, Jaw_?_Left selects the Jaw_L_Left and Jaw_R_Left controls. * matches any number of arbitrary characters; for example, Jaw* selects every control whose name starts with Jaw: JawOpenExtreme, Jaw_R_Left, JawOpen, Jaw_Right_L. A list value of * selects all controls.
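The whitelist/blacklist rules and wildcards above map naturally onto shell-style pattern matching. This sketch uses Python's `fnmatch.fnmatchcase`, where `?` and `*` behave as described; note that `fnmatch` also treats `[...]` as a character class, and that case-sensitive matching is an assumption. The helper names are hypothetical, not part of Wrap4D.

```python
from fnmatch import fnmatchcase
from typing import List


def parse_patterns(text: str) -> List[str]:
    """Split a comma-separated list into trimmed, non-empty patterns."""
    return [p.strip() for p in text.split(",") if p.strip()]


def matches_any(name: str, patterns: List[str]) -> bool:
    # '?' matches exactly one character, '*' matches any run of characters.
    return any(fnmatchcase(name, p) for p in patterns)


def fitted_controls(control_names: List[str], include: str, exclude: str) -> List[str]:
    """Controls on the whitelist and not on the blacklist (the blacklist wins)."""
    inc, exc = parse_patterns(include), parse_patterns(exclude)
    return [n for n in control_names if matches_any(n, inc) and not matches_any(n, exc)]
```

For example, with Include Controls set to `Jaw*` and Exclude Controls set to `JawOpenExtreme`, every Jaw control except JawOpenExtreme is fitted, and `Jaw_L_Left` alone does not select `Jaw_Left_L`.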

- Control Names to Regularization Weights:

a mapping from control names to regularization weights for controls whose values should be kept small. This is useful when a blendshape is similar to the neutral facial expression but is involved in corrections: the corresponding control values can change without any clear reason, and regularization solves this problem. Example of a Control Names to Regularization Weights value:

{
    "LipsTogether": 6,
    "JawOpenExtreme": 5
}

Note

In Control Names to Regularization Weights, control names are matched as written, so the order of suffixes matters. For example, Jaw_L_Left does not select the Jaw_Left_L control.
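The parameter value is written as a JSON object, so a regularization term can be sketched by parsing it and penalizing the listed controls. The squared form `weight * value**2` is an assumption chosen for illustration; the node's actual penalty is not documented here, and the function name is hypothetical.

```python
import json
from typing import Dict


def regularization_term(controls: Dict[str, float], weights_text: str) -> float:
    """Assumed penalty: sum of weight * value**2 over the listed controls."""
    weights = json.loads(weights_text)  # parameter value parsed as a JSON object
    return sum(w * controls.get(name, 0.0) ** 2 for name, w in weights.items())
```

With the example value, LipsTogether = 0.5 and JawOpenExtreme = 0.2 contribute 6 * 0.25 + 5 * 0.04 = 1.7, while unlisted controls such as JawOpen contribute nothing; an empty object `{}` disables regularization.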

- Fit Eyes Using Transforms:

if set, the controls responsible for eye rotations are not optimized in the main optimization; instead, they are computed from the input eye transforms.

- Left Eye Left Angle:

the maximum rotation angle of the left eye to the left, corresponding to a blendshape weight of 1.

- Left Eye Right Angle:

the maximum rotation angle of the left eye to the right, corresponding to a blendshape weight of 1.

- Left Eye Up Angle:

the maximum upward rotation angle of the left eye, corresponding to a blendshape weight of 1.

- Left Eye Down Angle:

the maximum downward rotation angle of the left eye, corresponding to a blendshape weight of 1.

- Right Eye Left Angle:

the maximum rotation angle of the right eye to the left, corresponding to a blendshape weight of 1.

- Right Eye Right Angle:

the maximum rotation angle of the right eye to the right, corresponding to a blendshape weight of 1.

- Right Eye Up Angle:

the maximum upward rotation angle of the right eye, corresponding to a blendshape weight of 1.

- Right Eye Down Angle:

the maximum downward rotation angle of the right eye, corresponding to a blendshape weight of 1.

- Left Eye Side Control Name:

the name of the control corresponding to the horizontal rotation of the left eye.

- Left Eye Vertical Control Name:

the name of the control corresponding to the vertical rotation of the left eye.

- Right Eye Side Control Name:

the name of the control corresponding to the horizontal rotation of the right eye.

- Right Eye Vertical Control Name:

the name of the control corresponding to the vertical rotation of the right eye.

Note

Fitting has two implementations: one for Blendshape Rigs with relaxation masks and one for rigs without them. The implementation for rigs without relaxation masks is faster.

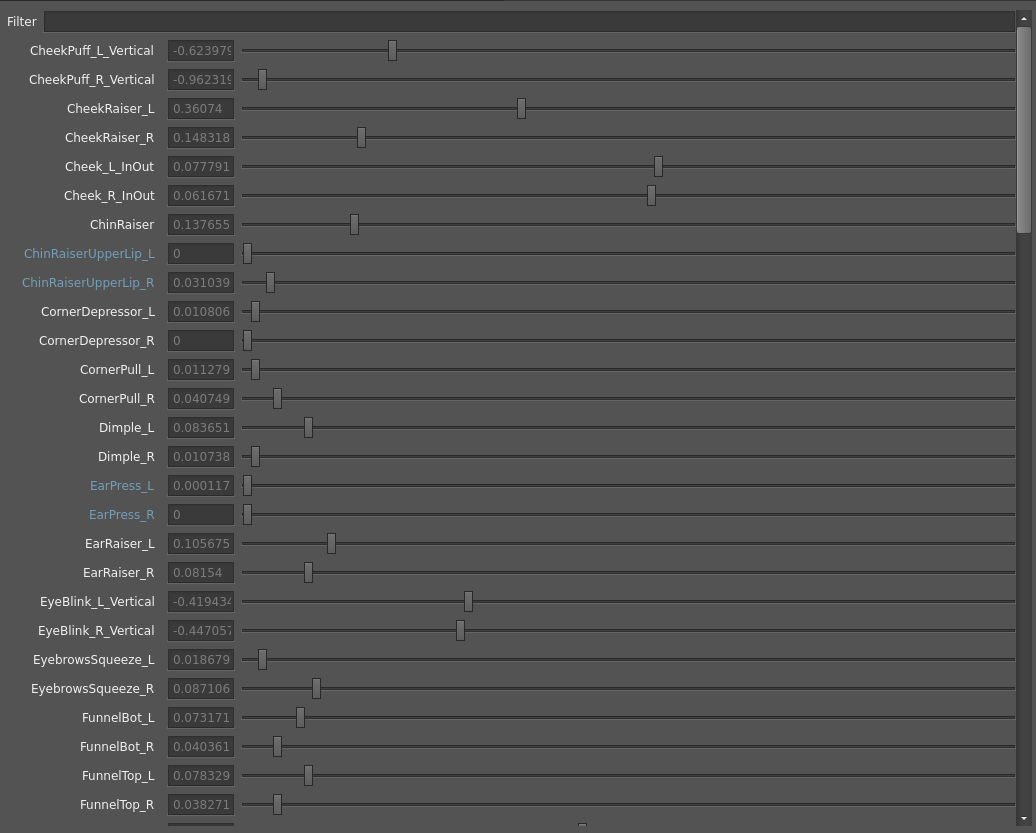

Visual Editor

The visual editor displays a list of output Blendshape Rig controls. In the visual editor, special controls are highlighted:

Fitted controls are white.

Excluded controls are gray.

Regularized controls are green.

Controls responsible for the eye rotations are green.

Copying mouth and eye closures from the target geometry

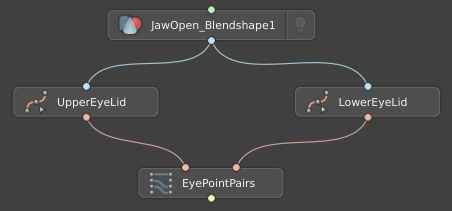

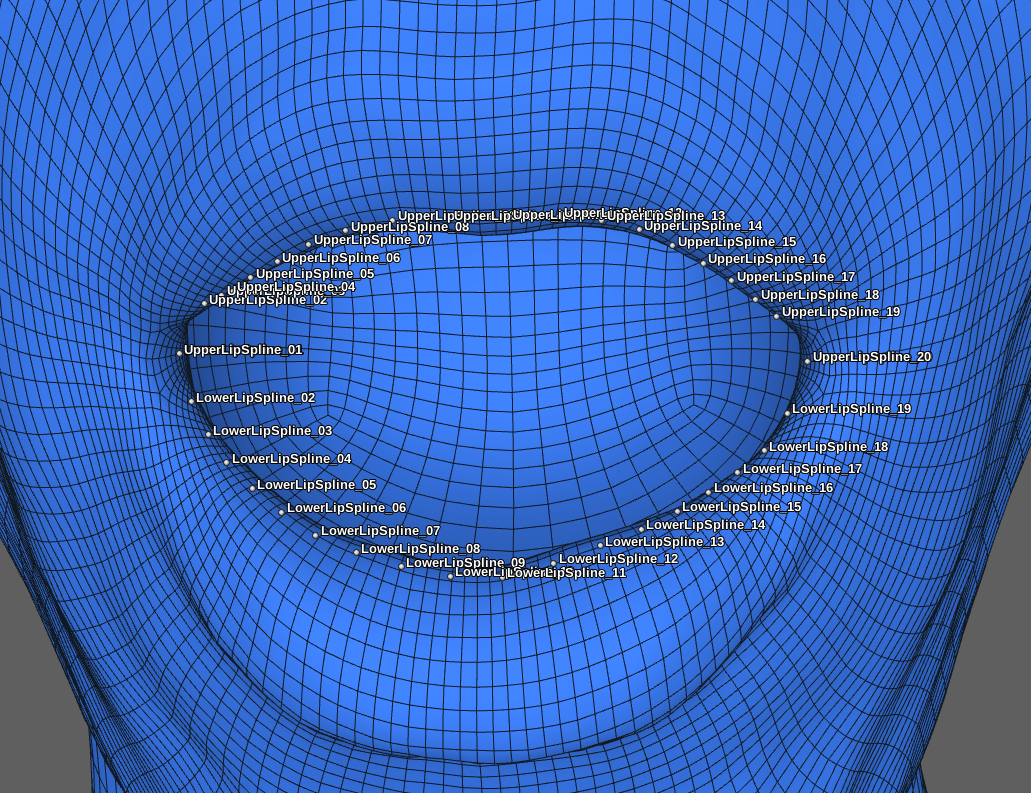

Named points on triangles are used to copy the degree of closure of the mouth and eyes. This is a multiple input: each input corresponds to one closure area, namely the lips, the left eye, or the right eye. The points are structured as follows: first come the points on one side of the lips or eye (top or bottom), then the corresponding points on the other side. To create them, add two SelectPointsWithSplines nodes: in the first, select points along the closure line of the lower lip or eyelid; in the second, select points along the closure line of the upper lip or eyelid. The splines in the two nodes must have different names, and both must have the same number of points (set by the Num Points Per Spline parameter). Then create a PointsToPointPairs node and merge the selected points. Check that the points are matched correctly in the visual editor of the PointsToPointPairs node: for both the eyes and the lips, the points of each pair must lie one above the other, so that they come close together when the eye or mouth is closed.
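The point layout described above (all points of one side, then the matching points of the other side) can be sketched as a pairing of two equal-length lists, plus a rough programmatic version of the "one above the other" check. The function names are hypothetical, and the alignment check assumes that X is the horizontal axis across the face; it does not replace inspecting the pairs in the PointsToPointPairs visual editor.

```python
from typing import List, Sequence, Tuple

Vec3 = Sequence[float]


def make_point_pairs(first_side: List[Vec3], second_side: List[Vec3]) -> List[Tuple[Vec3, Vec3]]:
    """Pair point i of one closure line with point i of the other."""
    if len(first_side) != len(second_side):
        raise ValueError("both splines must have the same number of points")
    points = list(first_side) + list(second_side)  # one side first, then the other
    n = len(first_side)
    return [(points[i], points[i + n]) for i in range(n)]


def misaligned_pairs(pairs: List[Tuple[Vec3, Vec3]], max_horizontal_offset: float) -> List[int]:
    """Indices of pairs whose points are not roughly one above the other (X assumed horizontal)."""
    return [i for i, (a, b) in enumerate(pairs) if abs(a[0] - b[0]) > max_horizontal_offset]
```

A mismatch in Num Points Per Spline between the two SelectPointsWithSplines nodes shows up here as an error rather than as silently shifted pairs.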

An example of a graph that computes these points is shown below.

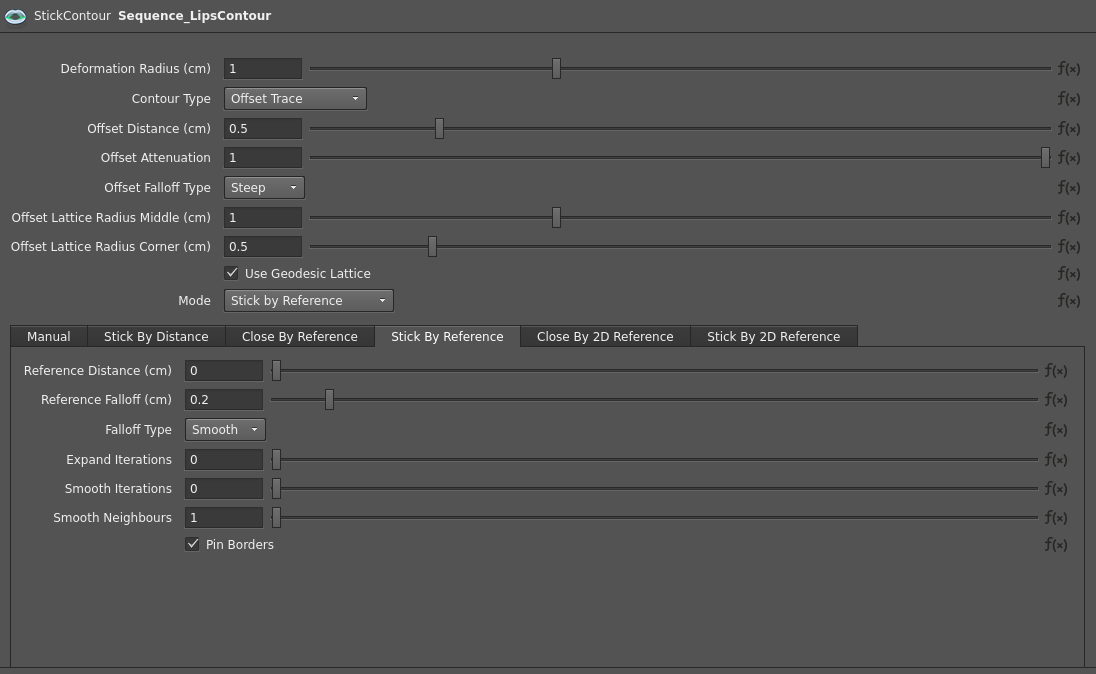

An example of the resulting eye points is shown below.

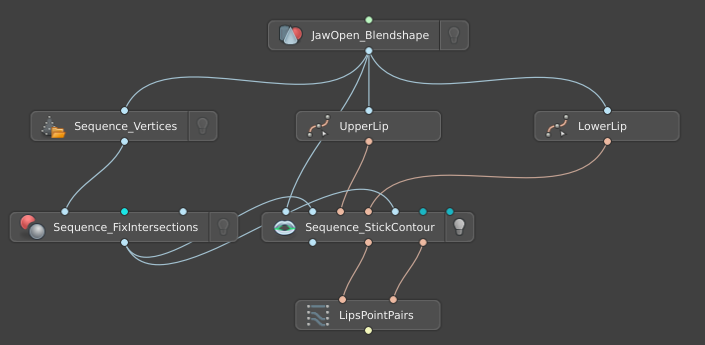

To obtain the lip splines, we also use the StickContour node, because the line where the lips meet can change depending on the expression, and the StickContour node determines the actual lip closure line for the given geometry. In the StickContour node, set the actor's facial expression with an open jaw as the Template Geom, and set the target expression (optionally processed by the FixIntersections node) as both the Deformable Geom and the Reference Geom. When setting up the node, test it on various cases and make sure, for example in the visual editor, that the upper and lower splines lie as close to each other as possible when the mouth is closed. The First Calculated Reference Contour and Second Calculated Reference Contour outputs of the node are connected to the PointsToPointPairs node.

An example of the StickContour node parameters is shown below.

An example of a graph that computes the lip splines is shown below.

An example of the resulting lip points is shown below.

Computing control values responsible for the gaze direction

If the Fit Eyes Using Transforms parameter is disabled, the gaze direction control values are computed in the same way as the other control values. Otherwise, they are computed for the following deformation model: each eye first rotates around the X axis by the maximum angle for the given direction multiplied by the control value, then rotates around the Y axis by the maximum angle for the given direction multiplied by the control value. The gaze direction control values are computed from the input eye transforms, so the eye rotation transforms must be applied to eye geometry positioned at the origin and directed along the Z axis. More information about eye transforms can be found in the documentation of the FitEyesDirection node.
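The deformation model above can be sketched as a forward function (controls to rotation) and its inverse (rotation to controls). Several things here are assumptions not stated in the documentation: Y is up and the character faces +Z, the vertical control drives the X rotation and the side control drives the Y rotation, a positive vertical value looks down and a positive side value looks toward the character's left (+X), and the limits dictionary keys are hypothetical stand-ins for the Left/Right/Up/Down Angle parameters. Wrap4D's actual sign conventions and any clamping may differ.

```python
import math


def angle_from_control(value, pos_max_deg, neg_max_deg):
    """Signed control value -> angle in radians, using the limit for that direction."""
    max_deg = pos_max_deg if value >= 0 else neg_max_deg
    return math.radians(value * max_deg)


def control_from_angle(angle, pos_max_deg, neg_max_deg):
    """Angle in radians -> signed control value (inverse of angle_from_control)."""
    deg = math.degrees(angle)
    max_deg = pos_max_deg if deg >= 0 else neg_max_deg
    return deg / max_deg


def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(b):
    c, s = math.cos(b), math.sin(b)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def eye_rotation(side, vertical, limits):
    """Forward model: rotate about X by the vertical control, then about Y by the side control."""
    pitch = angle_from_control(vertical, limits["down"], limits["up"])  # positive = down (assumed)
    yaw = angle_from_control(side, limits["left"], limits["right"])     # positive = left (assumed)
    # "First X, then Y" acting on column vectors gives R = Ry @ Rx.
    return matmul(rot_y(yaw), rot_x(pitch))


def controls_from_rotation(R, limits):
    """Inverse model: recover (side, vertical) from the gaze direction R @ (0, 0, 1)."""
    dx, dy, dz = R[0][2], R[1][2], R[2][2]  # third column of R
    # For R = Ry(yaw) @ Rx(pitch) the direction is
    # (sin(yaw) cos(pitch), -sin(pitch), cos(yaw) cos(pitch)).
    pitch = -math.asin(max(-1.0, min(1.0, dy)))
    yaw = math.atan2(dx, dz)
    side = control_from_angle(yaw, limits["left"], limits["right"])
    vertical = control_from_angle(pitch, limits["down"], limits["up"])
    return side, vertical
```

For example, with limits `{"left": 30.0, "right": 30.0, "up": 20.0, "down": 25.0}`, `controls_from_rotation(eye_rotation(0.5, -0.3, limits), limits)` returns approximately `(0.5, -0.3)`. Only the gaze direction is used, so roll around the Z axis does not affect the recovered controls.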

Using multiple FitBlendRig nodes to reduce jitter in the result

There may be some jitter in the results of the Blendshape Rig fit. The amount of jitter depends on how accurately the Blendshape Rig can reproduce the target expressions, on the stabilization of the target expressions, and on secondary dynamics. Jitter appears because the problem is solved in three directions at once: per-vertex matching to the target expression, matching to the shape of the target expression, and copying mouth and eye closures from the target geometry. Improving the solution in one direction may worsen it in the others, so the solutions for two similar target facial expressions may differ. We use three FitBlendRig nodes in sequence to obtain results with minimal jitter:

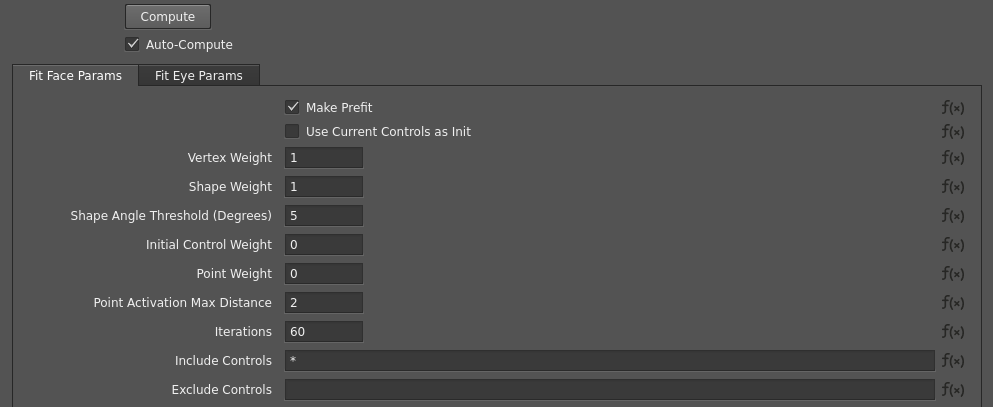

The first node does not copy mouth and eye closures: Point Weight is set to 0, Make Prefit is enabled, and Initial Control Weight is set to 0. An example of the parameters is shown below.

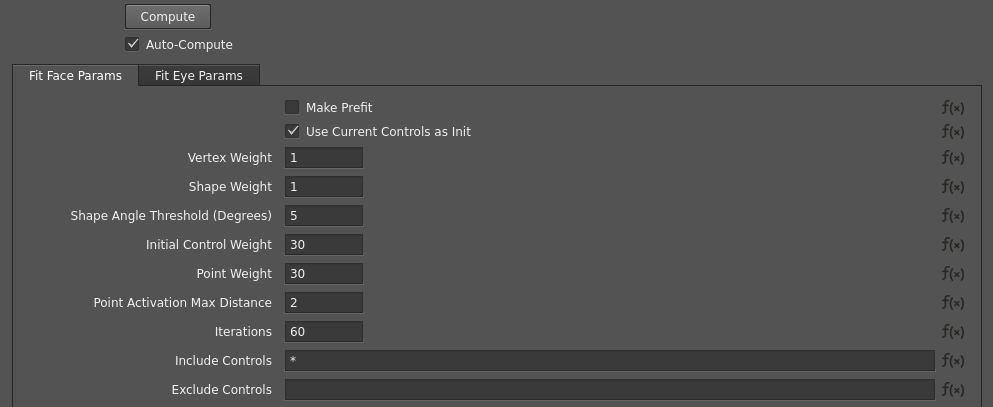

The second node copies mouth and eye closures. The output of the first FitBlendRig node is connected to it. Point Weight is set to 30, Make Prefit is disabled, Initial Control Weight is set to 30, and Use Current Controls As Init is enabled. This node copies the degree of closure of the mouth and eyes while changing the control values from the first step as little as possible. An example of the parameters is shown below.

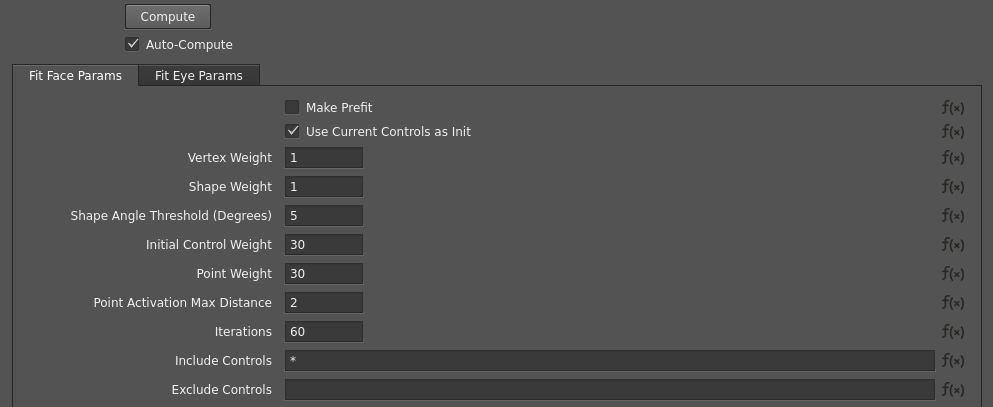

The third node starts from smoothed control values. For this step, first compute the fit for all frames, then load the control values in another project using the SmoothBlendRigControls node and connect it to the FitBlendRig node. The parameters of this FitBlendRig node are similar to those of the second node.

Tip

When smoothing control values, add the controls responsible for the gaze direction to the Exclude Controls parameter of the SmoothBlendRigControls node.
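The smoothing step and the tip above can be illustrated with a simple centered moving average over frames that leaves excluded controls untouched. This is only a stand-in: the filter actually used by SmoothBlendRigControls is not described here, and the control names in the example are hypothetical. Excluding gaze controls presumably avoids blurring fast eye movements.

```python
from typing import Dict, Iterable, List

Frame = Dict[str, float]


def smooth_controls(frames: List[Frame], radius: int, exclude: Iterable[str] = ()) -> List[Frame]:
    """Centered moving average per control; excluded controls are copied unchanged."""
    excluded = set(exclude)
    n = len(frames)
    out = []
    for i, frame in enumerate(frames):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)  # window clipped at the ends
        smoothed = {}
        for name, value in frame.items():
            if name in excluded:
                smoothed[name] = value
            else:
                window = [frames[j][name] for j in range(lo, hi)]
                smoothed[name] = sum(window) / len(window)
        out.append(smoothed)
    return out
```

The smoothed values would then serve as the input rig controls of the third FitBlendRig node, with Use Current Controls As Init enabled.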

Troubleshooting

Bad fit to the target expression

In a Blendshape Rig fit, it is difficult to determine the source of a problem from the visualization of the result alone, so we suggest several test runs of the node on problematic frames with changed settings to identify the problem and find a solution.

In the existing settings, set the Shape Weight parameter to zero. If this solves the problem, the neutral geometry of the Blendshape Rig contains collapsing triangles, that is, triangles with an extremely small minimum angle. To fix this, increase the Shape Angle Threshold (Degrees) parameter until the fit result is correct. This needs to be done only once per character.

In the existing settings, set the Point Weight parameter to zero. If this solves the problem, first check that the splines connected to the node input meet the requirements described above. If the problem occurs on the lips, check the splines computed by the StickContour node and adjust its settings if necessary. You can also lower the Point Weight value, because a weight that is too high makes the problem harder to solve.

In the existing settings, set Control Names to Regularization Weights to {}, Include Controls to *, and Exclude Controls to an empty string, because these parameters make it harder for the Blendshape Rig to match the target expression. If this helps, weaken these parameters to get a more accurate match.

The lips do not close completely as required by the target facial expression

First, make sure that the splines defining the lip closure lines lie on the closure line of this expression. You can check this in the visual editor of the StickContour node. There are two main ways to solve the problem: shifting the splines forward or backward across the edge loops in the SelectPointsWithSplines node, or changing the settings of the StickContour node.